Why use PowerShell for configuring NIC Teaming?

First, you can use Windows PowerShell

to configure NIC Teaming on servers running the Server Core installation option

of Windows Server 2012 or later.

Why is that important?

Well, if you're thinking of deploying new Windows servers into your environment, you should consider making them Server Core machines rather than Server With A GUI machines. In fact, Microsoft gives you a big hint in this direction: if you perform a manual installation of Windows Server 2012 or later, the Server Core option is selected by default. Why should you choose the Server Core option over the GUI one when you deploy Windows Server? Because Server Core machines require less servicing and have a smaller attack surface, since they have fewer components installed that need to be kept current with software updates.

The second reason for using PowerShell, of course, is that it allows automation through scripting. This means you can use PowerShell to script the creation and configuration of NIC teams across multiple servers in your environment. Automation saves time and reduces errors when you have tasks you need to perform repeatedly.
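To make the scripting point concrete, here is a minimal sketch of creating the same team on several servers in one pass. The server names, the team name Team1, and the adapter names NIC 1 and NIC 2 are all hypothetical, and the sketch assumes PowerShell remoting is enabled on the target servers.

```powershell
# Hypothetical server, team, and adapter names; adjust for your environment.
$servers = 'SRV-CORE-01', 'SRV-CORE-02'

Invoke-Command -ComputerName $servers -ScriptBlock {
    # Create a Switch Independent team from two adapters on each server.
    # -Confirm:$false suppresses the confirmation prompt so the script runs unattended.
    New-NetLbfoTeam -Name 'Team1' `
                    -TeamMembers 'NIC 1', 'NIC 2' `
                    -TeamingMode SwitchIndependent `
                    -Confirm:$false
}
```

Keep in mind that if the remoting session runs over one of the adapters being teamed, the connection may drop briefly while the team comes up, so it's worth trying this on a test server first.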

Choosing the right teaming mode

Three teaming modes:

- Static Teaming
- Switch Independent
- LACP

This is actually a bit confusing because there are really only two types of teaming modes available:

- Switch independent
- Switch dependent

The UI doesn't display a mode called Switch Dependent but instead displays two types of switch dependent modes:

- Static Teaming
- LACP

So your choices for teaming mode really look like this:

- Switch Independent
- Switch Dependent
  - Static Teaming
  - LACP

When to use Switch Independent teaming

Switch Independent is the default

teaming mode when you create a new team. This mode assumes that you don't need

your Ethernet switches to participate in making NIC teaming happen for your

server. In other words, this mode assumes the Ethernet switch isn't even aware

that the NICs connected to it are teamed.

Because of this, selecting Switch

Independent mode allows you to connect each NIC in a team to a different

Ethernet switch on your backbone. However, you can also use this mode when all

the NICs in the team are connected to the same Ethernet switch.

One scenario where you could use this

functionality is to provide fault tolerance in the case of one of the teamed

NICs failing. For example, you could create a team from two NICs (NIC1 and

NIC2) on your server and then connect NIC1 to SWITCH1 and NIC2 to SWITCH2. You

could then configure NIC1 as the active adapter for the team, and NIC2 as the

standby adapter for the team. Then if NIC1 fails, NIC2 will automatically

become active and your server won't lose its connectivity with your backbone.

Note that the above approach, which is

variously called Active/Passive Teaming or Active/Standby teaming, provides

fault tolerance but not bandwidth aggregation for your server's connection to

the network. You can just as easily leave both NICs configured as active and

get both fault tolerance and bandwidth aggregation if you prefer, but the

choice is up to you.

When to use Switch Dependent teaming

You can only use one of the Switch Dependent modes (Static Teaming or LACP) if all of the teamed NICs are connected to the same Ethernet switch on your backbone. Let's consider each mode in turn.

Static Teaming (also called generic teaming or IEEE 802.3ad teaming) requires configuring both the server and the Ethernet switch to get the team to work. Generally only enterprise-class Ethernet switches support this kind of functionality, and since you need to configure it manually on the Ethernet switch, it takes additional work to get it working. Manual configuration also increases the odds of a configuration error creeping in during setup. If at all possible, try to stay away from this approach to NIC teaming on Windows Server.

LACP (also called IEEE 802.1ax teaming

or dynamic teaming, which should not be confused with the new Dynamic load

balancing mode available for NIC Teaming in Windows Server 2012 R2) uses the

Link Aggregation Control Protocol to dynamically identify the network links

between the server and the Ethernet switch. This makes LACP superior to static

teaming because it enables the server to communicate with the Ethernet switch

during the team creation process, thus enabling automatic configuration of the

team on both the server and Ethernet switch. But while most enterprise-class Ethernet switches support LACP, you generally need to enable it on selected switch ports before it can be used. So LACP is less work to configure than Static Teaming, but still more work to set up than Switch Independent teaming, which is the default option for Windows NIC Teaming.
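For reference, the three teaming modes map onto the -TeamingMode parameter of the New-NetLbfoTeam cmdlet. The sketch below only previews each choice with -WhatIf; the team name Team1 and the adapter names are hypothetical.

```powershell
# Hypothetical team and adapter names.
# -WhatIf previews each command without changing anything.
$members = 'NIC 1', 'NIC 2'

# Switch Independent (also what you get when -TeamingMode is omitted)
New-NetLbfoTeam -Name 'Team1' -TeamMembers $members -TeamingMode SwitchIndependent -WhatIf

# Switch dependent, Static Teaming: the switch ports must be configured manually to match
New-NetLbfoTeam -Name 'Team1' -TeamMembers $members -TeamingMode Static -WhatIf

# Switch dependent, LACP: LACP must be enabled on the relevant switch ports
New-NetLbfoTeam -Name 'Team1' -TeamMembers $members -TeamingMode Lacp -WhatIf
```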

Choose Switch Independent teaming

The bottom line then is that Switch Independent teaming is generally your best choice of teaming mode when creating a new NIC team, for two reasons. First, you don't need to perform any configuration on the Ethernet switch to get it working. And second, you can gain two kinds of fault tolerance:

- Protection against the failure of a NIC in the team
- Protection against the failure of an Ethernet switch connected to a teamed NIC (when you are connecting different teamed NICs to different Ethernet switches)

However, there are a couple of scenarios described below where Switch Dependent teaming might be the best choice if your Ethernet switches support such functionality and you're up to configuring it.

Choosing the right load balancing mode

Let's say you've chosen Switch Independent teaming as the teaming mode for a new NIC team you're creating. Your next decision is which load balancing mode you're going to use. As described in the previous article in this series, NIC Teaming in Windows Server 2012 supports two different load balancing modes: Address Hash and Hyper-V Port. Windows Server 2012 R2 adds a third load balancing mode called Dynamic, but at the time of writing there are few details on how it works, so we'll revisit it once more information becomes available. And if your server is running Windows Server 2012, this load balancing mode isn't an option for you anyway.

When to use Address Hash

The main limitation of this load

balancing approach is that inbound traffic can only be received by a single

member of the team. The reason for this has to do with the underlying operation

of how address hashing uses IP addresses and TCP ports to seed the hash

function. So a scenario where this could be the best choice would be if your

server was running the kind of workload where inbound traffic is light while

outbound traffic is heavy.

Sound familiar? That's exactly what a

web server like IIS experiences in terms of network traffic. Incoming

HTTP/HTTPS requests are generally short streams of TCP traffic. What gets

pumped out in response to such requests however can include text, images, and

video.

When to use Hyper-V Port

This load balancing approach

affinitizes each Hyper-V port (i.e. each virtual machine) on a Hyper-V host to

a single NIC in the team at any given time. Basically what you get here is no

load balancing from the virtual machine's perspective. Each virtual machine can

only utilize one teamed NIC at a time, so maximum inbound and outbound

throughput for the virtual machine is limited to what's provided by a single

physical NIC on the host.

When might you use this form of load

balancing? A typical scenario might be if the number of virtual machines

running on the host is much greater than the number of physical NICs in the

team on the host. Just be sure that the physical NICs can provide sufficient

bandwidth to the workloads running in the virtual machines.
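In PowerShell, the load balancing mode is set through the -LoadBalancingAlgorithm parameter. The following is a minimal sketch assuming a team named Team1 already exists (a hypothetical name); the Address Hash choice in the UI corresponds to the TransportPorts value.

```powershell
# 'Team1' is a hypothetical team name. -WhatIf previews the change only.

# Address Hash as offered in the UI (hashes on IP addresses and TCP/UDP ports)
Set-NetLbfoTeam -Name 'Team1' -LoadBalancingAlgorithm TransportPorts -WhatIf

# Hyper-V Port, intended for a team on a Hyper-V host
Set-NetLbfoTeam -Name 'Team1' -LoadBalancingAlgorithm HyperVPort -WhatIf

# Show the settings currently in effect
Get-NetLbfoTeam -Name 'Team1' | Format-List Name, TeamingMode, LoadBalancingAlgorithm
```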

Other options

If you implement NIC teaming on a

Hyper-V host and your virtual machines need to be able to utilize bandwidth

from more than one NIC in the team, then an option for you (if your Ethernet

switch supports it) is to configure LACP or Static Teaming as your teaming mode

and Address Hash as your load balancing mode. This is possible because it's how

you configure the Ethernet switch that determines how traffic will be

distributed across the team members. This scenario is kind of unusual however

because typically if you have a virtualized workload that requires high bandwidth

for both inbound and outbound traffic (such as a huge SQL Server database

workload) then you're probably only going to have one or two virtual machines

running on the Hyper-V host.

Then there's the matter of implementing

NIC teaming within the guest operating system of the virtual machine as opposed

to the underlying host's operating system. But we'll leave discussion of that

topic until the next article in this series, and after that we'll start

examining how Windows PowerShell can be used to configure and manage NIC

teaming on Windows Server 2012 or later.

Considerations for physical servers

If you simply create a NIC team from a

couple of on-board Gigabit network adapters in one of your physical servers,

you probably won't have any problems. Windows NIC Teaming will work just like

you expect it to work.

But if you've got a high-end server

that you've bought a couple of expensive network adapter cards for, and you're

hooking the server up to your 10 GbE backbone network, and you want to ensure

the best performance possible while taking advantage of advanced capabilities

like virtual LAN (VLAN) isolation and Single-Root I/O Virtualization (SR-IOV)

and so on, then Windows NIC Teaming can be tricky to set up properly.

Supported network adapter capabilities

To help you navigate what might

possibly be a minefield (after all, if your server suddenly lost all

connectivity with the network your job might be on the line) let's first start

by summarizing some of the advanced capabilities fully compatible with Windows

NIC Teaming that are found in more expensive network adapter hardware:

- Datacenter bridging (DCB): An IEEE standard that allows for hardware-based bandwidth allocation for specific types of network traffic. DCB-capable network adapters can enable storage, data, management, and other kinds of traffic all to be carried on the same underlying physical network in a way that guarantees each type of traffic its fair share of bandwidth.
- IPsec Task Offload: Allows processing of IPsec traffic to be offloaded from the server's CPU to the network adapter for improved performance.
- Receive Side Scaling (RSS): Allows network adapters to distribute kernel-mode network processing across multiple processor cores in multicore systems. Such distribution of processing enables support of higher network traffic loads than are possible if only a single core is used.
- Virtual Machine Queue (VMQ): Allows a host's network adapter to pass DMA packets directly into the memory stacks of individual virtual machines. The net effect of doing this is to allow the host's single network adapter to appear to the virtual machines as multiple NICs, which then allows each virtual machine to have its own dedicated NIC.

If the network adapters on a physical

server support any of the above advanced capabilities, these capabilities will

also be supported when these network adapters are teamed together using Windows

NIC Teaming.
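If you're not sure which of these capabilities your adapters actually offer, the NetAdapter module can report on each one. This is a rough sketch that assumes an adapter named NIC 1 (a hypothetical name).

```powershell
# 'NIC 1' is a hypothetical adapter name.
$nic = 'NIC 1'

# Each cmdlet returns the adapter's settings for one feature.
# -ErrorAction SilentlyContinue hides errors from adapters that lack the feature.
Get-NetAdapterRss          -Name $nic -ErrorAction SilentlyContinue   # Receive Side Scaling
Get-NetAdapterVmq          -Name $nic -ErrorAction SilentlyContinue   # Virtual Machine Queue
Get-NetAdapterIPsecOffload -Name $nic -ErrorAction SilentlyContinue   # IPsec Task Offload
Get-NetAdapterQos          -Name $nic -ErrorAction SilentlyContinue   # DCB-related QoS settings
```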

Unsupported network adapter capabilities

Some advanced networking capabilities

are not supported however when the network adapters are teamed together on a

physical server. Specifically, the following advanced capabilities are either

not supported by or not recommended for use with Windows NIC Teaming:

- 802.1X authentication: Can be used to provide an additional layer of security to prevent unauthorized network access by guest, rogue, or unmanaged computers. 802.1X requires that the client be authenticated prior to being able to send traffic over the network switch port. 802.1X cannot be used with NIC teaming.
- Remote Direct Memory Access (RDMA): RDMA-capable network adapters can function at full speed with low latency and low CPU utilization. RDMA-capable network adapters are commonly used for certain server workloads such as Hyper-V hosts, servers running Microsoft SQL Server, and Scale-out File Server (SoFS) servers running Windows Server 2012 or later. Because RDMA transfers data directly to the network adapter without passing the data through the networking stack, it is not compatible with NIC teaming.
- Single-Root I/O Virtualization (SR-IOV): Enables a network adapter to divide access to its resources across various PCIe hardware functions and reduce processing overhead on the host. Because SR-IOV transfers data directly to the network adapter without passing the data through the networking stack, it is not compatible with NIC teaming.
- TCP Chimney Offload: Introduced in Windows Server 2008, TCP Chimney Offload transfers the entire networking stack workload from the CPU to the network adapter. Because of this, it is not compatible with NIC teaming.
- Quality of Service (QoS): This refers to technologies used for managing network traffic in ways that can meet service level agreements (SLAs) and/or enhance user experiences in a cost-effective manner. For example, by using QoS to prioritize different types of network traffic, you can ensure that mission-critical applications and services are delivered according to SLAs and optimize user productivity. Windows Server 2012 introduced a number of new QoS capabilities including Hyper-V QoS, which allows you to specify upper and lower bounds for network bandwidth used by a virtual machine, and new Group Policy settings to implement policy-based QoS by tagging packets with an 802.1p value to prioritize different kinds of network traffic. Using QoS with NIC teaming is not recommended as it can degrade network throughput for the team.

Other considerations for physical servers

Some other considerations when

implementing Windows NIC Teaming with network adapters in physical servers

include the following:

- A team can have a minimum of one physical network adapter and a maximum of 32 physical network adapters.
- All network adapters in the team should operate at the same speed, e.g. 1 Gbps. Teaming of physical network adapters of different speeds is not supported.
- Any switch ports on Ethernet switches that are connected to the teamed physical network adapters should be configured to be in trunk mode, i.e. promiscuous mode.
- If you need to configure VLANs for teamed physical network adapters, do it in the NIC teaming interface (if the server is not a Hyper-V host) or in the Hyper-V Virtual Switch settings (if the server is a Hyper-V host).
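For the non-Hyper-V case in the last point, VLANs can be configured on the team by adding a team interface bound to a VLAN ID. A minimal sketch, assuming a team named Team1 and VLAN ID 42 (both hypothetical values):

```powershell
# 'Team1' and VLAN ID 42 are hypothetical values.
# Preview adding a team interface that carries only traffic tagged with VLAN 42.
Add-NetLbfoTeamNic -Team 'Team1' -VlanID 42 -WhatIf

# List the team's interfaces along with their VLAN settings
Get-NetLbfoTeamNic -Team 'Team1'
```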

Considerations for virtual machines

You can also implement Windows NIC

Teaming within virtual machines running on Hyper-V hosts in order either to

aggregate network traffic or to help ensure availability by providing failover

support. Once again however, there are some considerations you need to be aware

of before trying to implement such a solution:

- Each virtual network adapter must be connected to a different virtual switch on the Hyper-V host on which the virtual machine is running. These virtual switches must all be of the external type; you cannot team together virtual network adapters that are connected to virtual switches of either the internal or private type.
- The only supported teaming mode when teaming virtual network adapters together is the Switch Independent teaming mode.
- The only supported load balancing mode when teaming virtual network adapters together is the Address Hash load balancing mode.
- If you need to configure VLANs for teamed virtual network adapters, make sure that the virtual network adapters are either each connected to different Hyper-V virtual switches or are each configured using the same VLAN ID.

We'll revisit some of these considerations

for teaming physical and virtual network adapters in later articles in this

series. But now it's time to dig into the PowerShell cmdlets for managing

Windows NIC Teaming and that's the topic of the next couple of articles in this

series.

Examining the initial network configuration

Let's use Windows PowerShell to examine

the initial network configuration of the server. The Get-NetAdapter cmdlet can

be used to display the available network adapters as follows:
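Run with no parameters, the cmdlet lists every visible adapter with its name, description, status, MAC address, and link speed:

```powershell
# List all visible network adapters on the server
Get-NetAdapter
```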

We can use the -Physical option to make

sure these are physical adapters not virtual ones:
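The same command with the -Physical switch would look like this:

```powershell
# Restrict the listing to physical adapters
Get-NetAdapter -Physical
```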

Here's another way of doing this:
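One possibility, offered here as a sketch rather than as the exact command originally shown, is to filter on the Virtual property that each adapter object exposes:

```powershell
# Keep only adapters whose Virtual property is false.
# This is a similar filter to -Physical but may not match it in every case.
Get-NetAdapter | Where-Object { -not $_.Virtual }
```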

We can use the Get-NetIPInterface cmdlet to list all network interfaces that start with the word "NIC" and verify they're using DHCP to acquire their IP addresses:
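A sketch of such a query, assuming the adapters have aliases beginning with "NIC" as described:

```powershell
# Filter interfaces by alias and show whether DHCP is enabled on each
Get-NetIPInterface |
    Where-Object InterfaceAlias -like 'NIC*' |
    Format-Table InterfaceAlias, AddressFamily, Dhcp, ConnectionState
```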

We can use the Get-NetIPAddress cmdlet to display the IP addresses and subnet mask (via prefix length) assigned by DHCP to each adapter:
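Something along these lines would do it, again assuming aliases that begin with "NIC". The PrefixOrigin column shows Dhcp for addresses that came from a DHCP server.

```powershell
# Show the address, prefix length, and origin for each matching interface
Get-NetIPAddress |
    Where-Object InterfaceAlias -like 'NIC*' |
    Format-Table InterfaceAlias, AddressFamily, IPAddress, PrefixLength, PrefixOrigin
```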

PowerShell cmdlets for Windows NIC teaming

NetLbfo is the name of the PowerShell

module for Windows NIC Teaming. You can use the Get-Command cmdlet to display a

list of all cmdlets in this module as follows:
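The listing can be produced like this:

```powershell
# List every command exported by the NIC Teaming module
Get-Command -Module NetLbfo
```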

We can use the Get-NetLbfoTeam cmdlet to verify that no team currently exists: Get-NetLbfoTeam

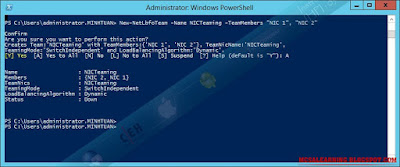

Creating a new team

The New-NetLbfoTeam cmdlet can be used

to create a new team. Here's how we could create a new team that includes both

physical network adapters on the server:
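A minimal sketch of such a command, assuming a team name of Team1 and adapters named NIC 1 and NIC 2 (hypothetical names, so substitute whatever Get-NetAdapter reports on your server):

```powershell
# Hypothetical team and adapter names.
# -WhatIf reports what would happen without creating the team.
New-NetLbfoTeam -Name 'Team1' -TeamMembers 'NIC 1', 'NIC 2' -WhatIf
```

Running the same command again without -WhatIf performs the actual creation.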

The above command illustrates the handy

use of the -WhatIf option which lets you see what a PowerShell command will do before

you actually execute it.

The figure shows that the status of both the team and the adapter in it is Active, so let's run Get-NetLbfoTeam to get more info about the new team:

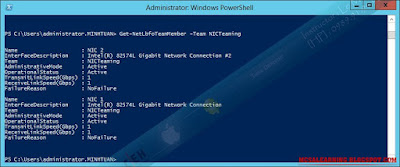

Let's use the Get-NetLbfoTeamMember

cmdlet to verify that our new team has two members in it:
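Assuming the team is named Team1 (a hypothetical name), the check could look like this:

```powershell
# List the adapters that belong to the team
Get-NetLbfoTeamMember -Team 'Team1'
```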

We can also use Get-NetLbfoTeamNic to

get information about the interfaces defined for the team:
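Again assuming a team named Team1:

```powershell
# List the team interfaces (team NICs) exposed by the team
Get-NetLbfoTeamNic -Team 'Team1'
```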

Removing a member from a team

Now let's use the

Remove-NetLbfoTeamMember cmdlet to remove a member from a team.
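As a sketch, removing a hypothetical adapter named NIC 2 from a hypothetical team named Team1 would look like this:

```powershell
# Hypothetical adapter and team names.
# The cmdlet asks for confirmation before removing the member.
Remove-NetLbfoTeamMember -Name 'NIC 2' -Team 'Team1'
```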

Let's check on the status of the

members of the team:

Adding a member to a team

Now let's use the Add-NetLbfoTeamMember cmdlet to add our second network adapter to the new team. We'll use -WhatIf first to make sure we're doing it right:
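With the same hypothetical names as before (adapter NIC 2, team Team1), the preview might be:

```powershell
# Preview adding the adapter to the team; nothing is changed
Add-NetLbfoTeamMember -Name 'NIC 2' -Team 'Team1' -WhatIf
```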

Let's go ahead and add the second

adapter to the team:
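Dropping -WhatIf makes the change for real (adapter and team names are still hypothetical):

```powershell
# Add the adapter to the team; the cmdlet asks for confirmation first
Add-NetLbfoTeamMember -Name 'NIC 2' -Team 'Team1'
```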

Again it looks like something went

wrong, so let's go into the GUI and see what our team looks like now:

Everything looks good so let's run

Get-NetLbfoTeam again to get more info about the team:

The Status of the team is Up, so everything

is OK. Let's check on the status of the members of the team:

Let's also see what we can find out

about the interface for the team:

Configuring failover

Now let's say that we've decided to

configure our team for failover instead of link aggregation. Specifically, the

team will use the "Ethernet" network adapter by default and if

"Ethernet" fails then the "Ethernet 2" network adapter will

take over to ensure availability for our server on the network. We can do this

by using the Set-NetLbfoTeamMember cmdlet as follows:
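A sketch of the preview step follows. The adapter name in $standby is an assumption, so substitute the name of the adapter you want on standby, for example 'Ethernet 2'.

```powershell
# Hypothetical adapter name; use the one that should wait on standby.
$standby = 'NIC 2'

# Preview putting that team member into standby mode
Set-NetLbfoTeamMember -Name $standby -AdministrativeMode Standby -WhatIf
```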

Now let's do it:
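The real change is the same command without -WhatIf (adapter name still an assumption):

```powershell
# Hypothetical adapter name; use the one that should wait on standby.
$standby = 'NIC 2'

# Put that team member into standby mode
Set-NetLbfoTeamMember -Name $standby -AdministrativeMode Standby
```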

We can use Get-NetLbfoTeamMember to

verify the configuration change as follows:
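One way to see the configured mode and the live status side by side, assuming a team named Team1:

```powershell
# Compare the configured mode with the live status of each member
Get-NetLbfoTeamMember -Team 'Team1' |
    Format-Table Name, AdministrativeMode, OperationalStatus
```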

Note that the operational status of the

"NIC 2" network adapter shown above displays as Standby. The GUI shows

us the same thing:

To test this, we'll now disconnect the

network cable from the "NIC 1" adapter (the active adapter) and see

what happens to the "NIC 2" adapter (the standby adapter) as a result

of our action. Here's what Get-NetLbfoTeamMember shows after performing this

action:

Note that "NIC 2" now shows

up as operationally active, so failover has indeed occurred and can be verified

in the GUI:

Now let's reconnect the network cable to the "NIC 1" adapter and see what happens. Here's what the GUI shows immediately after performing this action:

A few seconds later the GUI looks like

this:

Notice that it takes a few seconds for everything

to work again.

Let's change team member "NIC

2" from standby back to active so we can use the team for link aggregation

instead of failover:
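Switching the member back is the mirror image of the earlier change. The adapter name NIC 2 is taken from the text above; adjust it if yours differs.

```powershell
# Return the standby member to active duty
Set-NetLbfoTeamMember -Name 'NIC 2' -AdministrativeMode Active
```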

One final thing: let's run ipconfig and see what the IP address configuration of the two physical network adapters on our server looks like after creating a team from them:
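The command itself is simply ipconfig. The second line below is an optional extra that lists MAC addresses, which can be handy for comparing the team's MAC address with those of its members.

```powershell
# Show the IP configuration of the interfaces visible to the IP stack
ipconfig

# Optional: list adapter names and MAC addresses for comparison
Get-NetAdapter | Format-Table Name, InterfaceDescription, MacAddress
```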

Notice that instead of displaying our

two adapters the ipconfig command displays the IP address configuration of the

team that was created from the adapters. Notice also that the IP address of the

team is the same as what the primary team member ("Ethernet") had as

its configuration before the new team was created. When you create a new team,

one of the team members is assigned the role of primary team member like this,

and the MAC address for the team is the MAC address of the network adapter used

for this primary team member. This is useful knowledge if you ever need to

perform a network trace to troubleshoot an issue involving a NIC team.
